Resource overview

This project is a Chinese chatbot built on a TensorFlow seq2seq model, using a Chinese vocabulary constructed with jieba word segmentation.

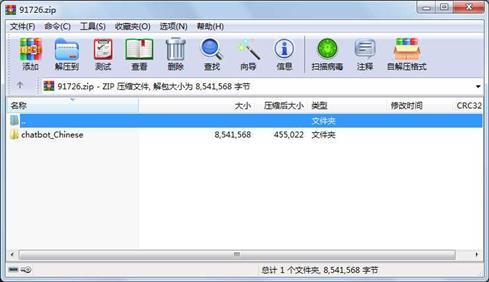

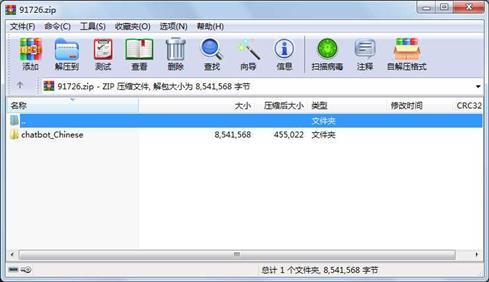

Code snippet and file listing

```python
# -*- coding:utf-8 -*-
# -*- author:zzZ_CMing CSDN address:https://blog.csdn.net/zzZ_CMing
# -*- 2018/07/31;14:23
# -*- python3.5
import sys
import numpy as np
import tensorflow as tf
from tensorflow.contrib.legacy_seq2seq.python.ops import seq2seq
import word_token
import jieba
import random

size = 8                  # number of units in each LSTM cell
GO_ID = 1                 # start-of-sequence marker for the decoder output
EOS_ID = 2                # end-of-sequence marker
PAD_ID = 0                # padding value
min_freq = 1              # frequency threshold for a word to be stored in the vocabulary
epochs = 2000             # number of training iterations
batch_num = 1000          # number of question-answer pairs used for training
input_seq_len = 25        # encoder input sequence length
output_seq_len = 50       # decoder output sequence length
init_learning_rate = 0.5  # initial learning rate

wordToken = word_token.WordToken()

# Loaded at module level so that num_encoder_symbols and num_decoder_symbols
# can be computed dynamically from the vocabulary
max_token_id = wordToken.load_file_list(['./samples/question', './samples/answer'], min_freq)
num_encoder_symbols = max_token_id + 5
num_decoder_symbols = max_token_id + 5
```
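The `word_token` module itself is not part of the snippet (only `word_token.py` appears in the file listing), so how `load_file_list` builds the vocabulary has to be guessed. The sketch below is a hypothetical stand-in, `SimpleWordToken`, not the author's class. It assumes the lines are already segmented (the original would run `jieba.cut` first), gives the most frequent words the smallest IDs starting at 3 (right after `PAD_ID`, `GO_ID` and `EOS_ID`), keeps words seen at least `min_freq` times, and returns the largest ID assigned, which appears to be the role `max_token_id` plays when sizing `num_encoder_symbols`. The starting ID and the frequency rule are assumptions; why the original adds 5 is not explained in the snippet.

```python
from collections import Counter
from typing import Dict, Iterable, List, Optional

# Reserved IDs, same values as in the listing above
PAD_ID, GO_ID, EOS_ID = 0, 1, 2
FIRST_WORD_ID = 3  # assumption: word IDs start right after the reserved ones


class SimpleWordToken:
    """Hypothetical stand-in for word_token.WordToken (not the original class)."""

    def __init__(self) -> None:
        self.word2id_dict: Dict[str, int] = {}
        self.id2word_dict: Dict[int, str] = {}

    def build(self, tokenized_lines: Iterable[List[str]], min_freq: int) -> int:
        """Assign IDs to words seen at least min_freq times; return the largest ID."""
        counts = Counter(word for line in tokenized_lines for word in line)
        # Most frequent words get the smallest IDs; ties are broken by string order
        ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
        max_id = FIRST_WORD_ID - 1  # stays 2 if no word qualifies
        for word, freq in ranked:
            if freq < min_freq:
                break  # the list is sorted by frequency, so the rest are rarer
            max_id += 1
            self.word2id_dict[word] = max_id
            self.id2word_dict[max_id] = word
        return max_id

    def word2id(self, word: str) -> Optional[int]:
        return self.word2id_dict.get(word)  # None for out-of-vocabulary words

    def id2word(self, word_id: int) -> Optional[str]:
        return self.id2word_dict.get(word_id)


if __name__ == "__main__":
    vocab = SimpleWordToken()
    # In the original, each line would be segmented with jieba.cut first
    max_token_id = vocab.build([["你好", "吗"], ["你好"]], min_freq=1)
    print(max_token_id, vocab.word2id("你好"), vocab.word2id("再见"))
```

Returning `None` for unknown words lets a caller such as `get_id_list_from` filter them with a simple truthiness check.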

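```python
def get_id_list_from(sentence):
    """
    Segment the sentence with jieba and return the list of word IDs
    """
    sentence_id_list = []
    seg_list = jieba.cut(sentence)
    for word in seg_list:
        word_id = wordToken.word2id(word)
        # keep only words that word2id maps to a non-zero ID
        if word_id:
            sentence_id_list.append(word_id)
    return sentence_id_list
```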
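`get_id_list_from` silently drops every word that `word2id` cannot map, so out-of-vocabulary words simply vanish from the ID sequence. A minimal sketch of the same lookup, with the segmenter passed in as a parameter so it can be exercised without jieba installed; `sentence_to_ids` and the plain-dict vocabulary are illustrative assumptions, not part of the original code.

```python
from typing import Callable, Dict, Iterable, List


def sentence_to_ids(sentence: str,
                    segment: Callable[[str], Iterable[str]],
                    vocab: Dict[str, int]) -> List[int]:
    """Segment a sentence and map each word to its ID, dropping unknown words."""
    ids = []
    for word in segment(sentence):
        word_id = vocab.get(word)
        # Same truthiness test as get_id_list_from: None (unknown) and 0 are skipped
        if word_id:
            ids.append(word_id)
    return ids


if __name__ == "__main__":
    vocab = {"今天": 3, "天气": 4, "好": 5}
    # str.split stands in for jieba.cut on pre-segmented text
    print(sentence_to_ids("今天 天气 很 好", str.split, vocab))
```

With jieba available, passing `jieba.cut` as `segment` on unsegmented text would mirror the original more closely; the resulting IDs then depend on how jieba's dictionary splits the sentence.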

```python
def get_train_set():
    """
    Build the question-answer training set
    """
    global num_encoder_symbols, num_decoder_symbols
    train_set = []
    with open('./samples/question', 'r', encoding='utf-8') as question_file:
        with open('./samples/answer', 'r', encoding='utf-8') as answer_file:
            while True:
                question = question_file.readline()
                answer = answer_file.readline()
                if question and answer:
                    # strip() with no arguments removes leading and trailing whitespace, including the newline
                    question = question.strip()
                    answer = answer.strip()
                    # convert the segmented sentences to word IDs
                    question_id_list = get_id_list_from(question)
                    answer_id_list = get_id_list_from(answer)
                    if len(question_id_list) > 0 and len(answer_id_list) > 0:
                        answer_id_list.append(EOS_ID)
                        train_set.append([question_id_list, answer_id_list])
                else:
                    break
    return train_set
```
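`get_train_set` reads the two sample files in lockstep: line i of `./samples/question` is paired with line i of `./samples/answer`, reading stops at the end of the shorter file, pairs where either side maps to no IDs are dropped, and `EOS_ID` is appended to each answer. The same logic can be sketched more compactly with `zip`; `build_train_set` and its `to_ids` parameter are hypothetical names, and `io.StringIO` objects stand in for the sample files.

```python
import io
from typing import Callable, List, TextIO

EOS_ID = 2  # end-of-sequence marker, same value as in the listing


def build_train_set(question_file: TextIO, answer_file: TextIO,
                    to_ids: Callable[[str], List[int]]) -> List[List[List[int]]]:
    train_set = []
    # zip stops at the shorter file, like the readline() loop in get_train_set
    for question, answer in zip(question_file, answer_file):
        question_ids = to_ids(question.strip())
        answer_ids = to_ids(answer.strip())
        # drop pairs where either side has no known words
        if question_ids and answer_ids:
            train_set.append([question_ids, answer_ids + [EOS_ID]])
    return train_set


if __name__ == "__main__":
    vocab = {"你好": 3, "吗": 4, "好": 5}

    def to_ids(sentence: str) -> List[int]:
        # whitespace split stands in for jieba segmentation
        return [vocab[w] for w in sentence.split() if w in vocab]

    questions = io.StringIO("你好 吗\n未知\n你好\n")
    answers = io.StringIO("好\n好\n")
    print(build_train_set(questions, answers, to_ids))
```

Because the files are aligned only by line number, a single missing or extra line in either file shifts every later pair.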

```python
def get_samples(train_set, batch_num):
    """
    Build sample data from train_set, the processed question-answer set.
    batch_num: how many question-answer pairs from train_set take part in training
    """
    raw_encoder_input = []
    raw_decoder_input = []
    if batch_num >= len(train_set):
        batch_train_set = train_set
    else:
        random_start = random.randint(0, len(train_set) - batch_num)
        batch_train_set = train_set[random_start:random_start + batch_num]

    # Encoder input: left-padded with PAD_ID; decoder input: GO_ID + answer + trailing PAD_ID
    for sample in batch_train_set:
        raw_encoder_input.append([PAD_ID] * (input_seq_len - len(sample[0])) + sample[0])
        raw_decoder_input.append([GO_ID] + sample[1] + [PAD_ID] * (output_seq_len - len(sample[1]) - 1))

    encoder_inputs = []
    decoder_inputs = []
    target_weights = []

    for length_idx in range(input_seq_len):
        ...  # the original snippet is truncated at this point
```
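The listing of `get_samples` stops inside the loop over input positions, so how it finishes is not shown. `tf.contrib.legacy_seq2seq` models take their inputs as Python lists with one `[batch_size]` int32 array per time step, which suggests the loop transposes the padded rows into that time-major layout. The sketch below is one way to do this under stated assumptions, not the author's code: `make_batch` is a hypothetical name, over-long questions keep their last tokens (the original does not truncate), and each step's weight is 1 only when the target at that step (the next decoder input) is not padding.

```python
import numpy as np

PAD_ID, GO_ID, EOS_ID = 0, 1, 2  # same values as in the listing


def make_batch(pairs, input_seq_len, output_seq_len):
    """Pad (question_ids, answer_ids) pairs and return time-major lists.

    Assumption: each answer already ends with EOS_ID, as produced by get_train_set.
    """
    enc = np.full((len(pairs), input_seq_len), PAD_ID, dtype=np.int32)
    dec = np.full((len(pairs), output_seq_len), PAD_ID, dtype=np.int32)
    for row, (question, answer) in enumerate(pairs):
        question = question[-input_seq_len:]  # assumption: keep the last tokens if too long
        enc[row, input_seq_len - len(question):] = question  # left padding
        answer = ([GO_ID] + answer)[:output_seq_len]
        dec[row, :len(answer)] = answer  # right padding
    # The target at step t is the decoder input at step t + 1; the last step has none
    pad_column = np.full((len(pairs), 1), PAD_ID, dtype=np.int32)
    targets = np.concatenate([dec[:, 1:], pad_column], axis=1)
    weights = (targets != PAD_ID).astype(np.float32)
    # Time-major: one [batch_size] array per position
    encoder_inputs = [enc[:, t] for t in range(input_seq_len)]
    decoder_inputs = [dec[:, t] for t in range(output_seq_len)]
    target_weights = [weights[:, t] for t in range(output_seq_len)]
    return encoder_inputs, decoder_inputs, target_weights


if __name__ == "__main__":
    pairs = [([3, 4], [5, EOS_ID]), ([6], [7, 8, EOS_ID])]
    enc_in, dec_in, w = make_batch(pairs, input_seq_len=4, output_seq_len=5)
    print([a.tolist() for a in enc_in])
    print([a.tolist() for a in dec_in])
    print([a.tolist() for a in w])
```

Zero weights on padded targets keep the sequence loss from rewarding the model for predicting `PAD_ID`.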

```text
Type          Size  Date        Time   Name
---------  -------  ----------  -----  ----
Directory        0  2018-07-31  18:09  chatbot_Chinese\.idea\
File           398  2018-07-31  16:54  chatbot_Chinese\.idea\chatbot_Chinese.iml
File           185  2018-07-31  16:54  chatbot_Chinese\.idea\misc.xm
File           282  2018-07-31  16:54  chatbot_Chinese\.idea\modules.xm
File         11108  2018-07-31  18:09  chatbot_Chinese\.idea\workspace.xm
Directory        0  2018-07-31  16:51  chatbot_Chinese\__pycache__\
File          2138  2018-07-31  15:33  chatbot_Chinese\__pycache__\word_token.cpython-35.pyc
File         10227  2018-07-31  17:44  chatbot_Chinese\demo_test.py
Directory        0  2018-07-31  18:04  chatbot_Chinese\model\
Directory        0  2018-07-31  18:04  chatbot_Chinese\model\2000\
File            67  2018-07-31  18:04  chatbot_Chinese\model\2000\checkpoint
File         13412  2018-07-31  18:04  chatbot_Chinese\model\2000\demo_.data-00000-of-00001
File           851  2018-07-31  18:04  chatbot_Chinese\model\2000\demo_.index
File       8500795  2018-07-31  18:04  chatbot_Chinese\model\2000\demo_.me
Directory        0  2018-07-31  18:02  chatbot_Chinese\samples\
File            83  2018-07-31  18:02  chatbot_Chinese\samples\answer
File            73  2018-07-31  18:02  chatbot_Chinese\samples\question
File          1949  2018-07-31  14:51  chatbot_Chinese\word_token.py
```

