Resource overview

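A conventional SVM model produces only a single output: it takes several features as input and returns one value. This code takes multiple input features and predicts multiple outputs, i.e. it is a multi-input, multi-output SVM model.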

Code snippet and file information

function RESULTS = assessment(Labels,PreLabels,par)
%
% function RESULTS = assessment(Labels,PreLabels,par)
%
% INPUTS:
%
% Labels    : A vector containing the true (actual) labels for a given set of samples.
% PreLabels : A vector containing the estimated (predicted) labels for a given set of samples.
% par       : 'class' or 'regress'
%
% OUTPUTS: (all contained in struct RESULTS)
%
% ConfusionMatrix : Confusion matrix of the classification process (true labels in columns, predictions in rows)
% Kappa           : Estimated Cohen's Kappa coefficient
% OA              : Overall Accuracy
% varKappa        : Variance of the estimated Kappa coefficient
% Z               : A basic Z-score for significance testing (considering that Kappa is normally distributed)
% CI              : Confidence interval at 95% for the estimated Kappa coefficient
% Wilcoxon signed-rank test and McNemar's test of significant differences
%
% Gustavo Camps-Valls, 2007(c)
% gcamps@uv.es
%
% Formulae in:
% Assessing the Accuracy of Remotely Sensed Data
% by Russell G. Congalton and Kass Green. CRC Press
%

switch lower(par)
    case {'class'}
        Etiquetas = union(Labels,PreLabels); % Class labels (usually 1,2,3,... but can work with text labels)
        NumClases = length(Etiquetas);       % Number of classes

        % Compute confusion matrix (rows: predictions, columns: true labels)
        ConfusionMatrix = zeros(NumClases);
        for i=1:NumClases
            for j=1:NumClases
                ConfusionMatrix(i,j) = length(find(PreLabels==Etiquetas(i) & Labels==Etiquetas(j)));
            end;
        end;

        % Compute Overall Accuracy and Cohen's kappa statistic
        n  = sum(ConfusionMatrix(:));      % Total number of samples
        PA = sum(diag(ConfusionMatrix));   % Number of correctly classified samples
        OA = PA/n;

        % Estimated Overall Cohen's Kappa (suboptimal implementation)
        npj = sum(ConfusionMatrix,1);      % Column totals (row vector)
        nip = sum(ConfusionMatrix,2);      % Row totals (column vector)
        PE  = npj*nip;
        if (n*PA-PE) == 0 && (n^2-PE) == 0
            % Solve indetermination
            warning('0 divided by 0')
            Kappa = 1;
        else
            Kappa = (n*PA-PE)/(n^2-PE);
        end

        % Cohen's Kappa Variance
        theta1 = OA;
        theta2 = PE/n^2;
        theta3 = (nip'+npj) * diag(ConfusionMatrix) / n^2;
        suma4 = 0;
        for i=1:NumClases
            for j=1:NumClases
                suma4 = suma4 + ConfusionMatrix(i,j)*(nip(i) + npj(j))^2;
            end;
        end;
        theta4 = suma4/n^3;
        varKappa = ( theta1*(1-theta1)/(1-theta2)^2 + 2*(1-theta1)*(2*theta1*theta2-theta3)/(1-theta2)^3 + (1-theta1)^2*(theta4-4*theta2^2)/(1-theta2)^4 )/n;
        Z  = Kappa/sqrt(varKappa);
        CI = [Kappa + 1.96*sqrt(varKappa), Kappa - 1.96*sqrt(varKappa)]; % [upper, lower] bound

        if NumClases==2
            % Wilcoxon test at 95% confidence interval
            [p1,h1] = signrank(Labels,PreLabels);
            if h1==0
                % h1 == 0 means signrank failed to reject the null hypothesis
                RESULTS.WilcoxonComment = 'The null hypothesis that both distributions have the same median cannot be rejected at the 5% level.';
            elseif h1==1

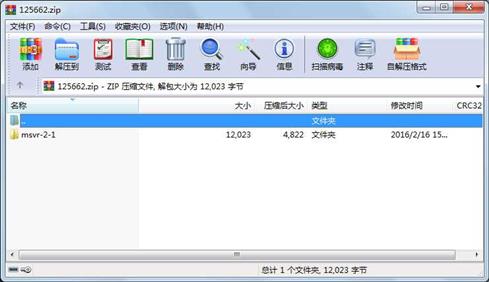

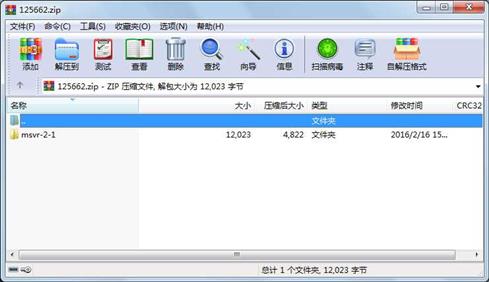

RESULTS.WilcoxonComment = ‘Th属性 大小 日期 时间 名称

----------- --------- ---------- ----- ----

目录 0 2016-02-16 07:39 msvr-2-1\

文件 2182 2016-02-16 07:37 msvr-2-1\demoMSVR.m

文件 4689 2010-09-15 09:18 msvr-2-1\assessment.m

文件 1642 2010-09-15 09:18 msvr-2-1\kernelmatrix.m

文件 3312 2016-02-16 07:39 msvr-2-1\msvr.m

文件 198 2010-09-15 09:18 msvr-2-1\scale.m
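The overall accuracy and Cohen's kappa computed in the 'class' branch of the assessment.m snippet above can be checked by hand on a tiny example. The following is a minimal Python sketch of the same arithmetic, not the author's MATLAB code: the function names `confusion_matrix` and `overall_accuracy_and_kappa` and the sample labels are hypothetical and exist only for illustration. It keeps the snippet's layout (predictions in rows, true labels in columns) and its convention of returning kappa = 1 when the denominator is zero.

```python
from collections import Counter


def confusion_matrix(labels, pre_labels):
    """Count (prediction, truth) pairs; rows are predictions, columns are true labels."""
    classes = sorted(set(labels) | set(pre_labels))
    counts = Counter(zip(pre_labels, labels))
    matrix = [[counts[(p, t)] for t in classes] for p in classes]
    return classes, matrix


def overall_accuracy_and_kappa(matrix):
    """Return (OA, kappa) using the same quantities as the snippet: n, PA, PE."""
    n = sum(sum(row) for row in matrix)                  # total number of samples
    if n == 0:
        raise ValueError("confusion matrix is empty")
    pa = sum(matrix[i][i] for i in range(len(matrix)))   # correctly classified samples
    row_totals = [sum(row) for row in matrix]            # 'nip' in the snippet
    col_totals = [sum(col) for col in zip(*matrix)]      # 'npj' in the snippet
    pe = sum(r * c for r, c in zip(row_totals, col_totals))
    oa = pa / n
    if n * n - pe == 0:
        # Assumption mirrored from the snippet: treat the 0/0 case as perfect agreement.
        kappa = 1.0
    else:
        kappa = (n * pa - pe) / (n * n - pe)
    return oa, kappa


# Hypothetical data: six samples, two classes, four predicted correctly.
true_labels = [1, 1, 1, 2, 2, 2]
predicted = [1, 1, 2, 2, 2, 1]
classes, cm = confusion_matrix(true_labels, predicted)
oa, kappa = overall_accuracy_and_kappa(cm)
print(classes, cm, oa, kappa)
```

With these labels, n = 6, PA = 4 and PE = 3*3 + 3*3 = 18, so OA = 4/6 and kappa = (6*4 - 18)/(36 - 18) = 1/3. The variance, Z-score and significance tests from the snippet are deliberately left out of this sketch.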
