Resource overview

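A Keras implementation of automatic text summarization based on semantic understanding. It covers cleaning and preprocessing of Chinese text, the use of word embeddings, and deep-learning-based automatic generation of Chinese summaries grounded in semantic understanding.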
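The cleaning and vocabulary steps are not part of the snippet below; they presumably live in `preprocess.py`, which appears in the file listing but is not reproduced. The following is a minimal sketch under stated assumptions. It keeps CJK ideographs, ASCII letters and digits, and a few full-width punctuation marks. It tokenizes at the character level and wraps each sequence in start and end tokens, mirroring how the model later looks up `SOS` and `EOS` ids. The regex rules, the token strings `<s>`, `</s>` and `<unk>`, and the helpers `clean_chinese_text`, `build_vocab` and `to_ids` are hypothetical, not the project's code.

```python
import re

# Hypothetical special tokens; the project's config.py defines the real SOS/EOS.
SOS, EOS, UNK = "<s>", "</s>", "<unk>"

_URL = re.compile(r"https?://\S+")
_SPACES = re.compile(r"\s+")
# Allowed: CJK Unified Ideographs, ASCII letters/digits, some full-width
# punctuation and the plain space. Everything else (emoji, 【】, ...) is dropped.
_DISALLOWED = re.compile(r"[^\u4e00-\u9fffA-Za-z0-9，。！？、；： ]")


def clean_chinese_text(text):
    """Remove URLs and disallowed characters; normalize whitespace."""
    text = _URL.sub("", text)
    text = _SPACES.sub(" ", text)      # tabs/newlines -> single spaces
    text = _DISALLOWED.sub("", text)   # drop characters outside the allowed set
    return _SPACES.sub(" ", text).strip()


def build_vocab(texts):
    """Character-level vocabulary with the special tokens at ids 0-2."""
    vocab = {UNK: 0, SOS: 1, EOS: 2}
    for text in texts:
        for ch in text:
            if ch != " " and ch not in vocab:
                vocab[ch] = len(vocab)
    return vocab


def to_ids(text, vocab):
    """Map characters to ids (unknown -> UNK id) and wrap with SOS/EOS ids."""
    body = [vocab.get(ch, vocab[UNK]) for ch in text if ch != " "]
    return [vocab[SOS]] + body + [vocab[EOS]]


sentence = clean_chinese_text("【新闻】今天 天气\n很好！😀")
vocab = build_vocab([sentence])
print(sentence, to_ids(sentence, vocab))
```

Character-level tokens avoid the need for a word segmenter. The actual project may instead segment words before building its vocabulary and embedding table.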

Code snippet and file listing

from config import *

import tensorflow as tf

from model_helper import _cell_list _single_cell

class attention_model():

def __init__(selfinputsvocab_tablereverse_vocab_table

vocab_sizeembed_size

num_units lrmodedropoutreusegrad_clip = None):

self.mode = mode

self.source = inputs.source

self.source_length = inputs.source_length

self.vocab_table = vocab_table

self.reverse_vocab_table = reverse_vocab_table

self.tgt_sos_id = tf.cast(self.vocab_table.lookup(tf.constant(SOS))tf.int32)

self.tgt_eos_id = tf.cast(self.vocab_table.lookup(tf.constant(EOS))tf.int32)

if self.mode ==‘TRAIN‘ or self.mode==‘EVAL‘:

self.target_input = inputs.target_input

self.target_output = inputs.target_output

self.target_length = inputs.target_length

self.dropout = dropout

elif self.mode == ‘INFER‘:

self.dropout = 0

else:

raise NotImplementedError

self.num_units = num_units

self.lr = lr

self.grad_clip = None

with tf.variable_scope(“Model“reuse=reuse) as scope:

with tf.variable_scope(“embedding“) as scope:

self.embedding_matrix = tf.get_variable(“shared_embedding_matrix“ [vocab_size embed_size] dtype=tf.float32)

with tf.variable_scope(“Encoder“) as scope:

self.encoder_emb_inp = tf.nn.embedding_lookup(

self.embedding_matrix self.source)

bi_inputs = self.encoder_emb_inp

fw_cells = _cell_list(num_unitsnum_layers=2dropout=self.dropout)

bw_cells = _cell_list(num_unitsnum_layers=2dropout=self.dropout)

# bi_outputs: batch_size * [L 2*num_units]

# bi_fw_state num_layers * [batch_size num_units]

# bi_bw_state num_layers * [batch_size num_units]

bi_outputs bi_fw_statebi_bw_state = tf.contrib.rnn.stack_bidirectional_dynamic_rnn(

cells_fw=fw_cells

cells_bw=bw_cells

inputs=bi_inputs

sequence_length=self.source_length

dtype = tf.float32

)

with tf.variable_scope(“Decoder“) as scope:

self.output_layer = tf.layers.Dense(vocab_size use_bias=False name=“output_projection“)

self.encoder_final_state = tuple([tf.concat((bi_fw_state[i]bi_bw_state[i])axis=-1) for i in range(2)])

self.decoder_cell = tf.contrib.rnn.MultiRNNCell(

_cell_list(num_units * 2 num_layers=2 dropout=0)

)

self.attention_mechanism = tf.contrib.seq2seq.LuongAttention(

num_units=num_units * 2

memory = bi_outputs

memory_sequence_length = self.source_length)

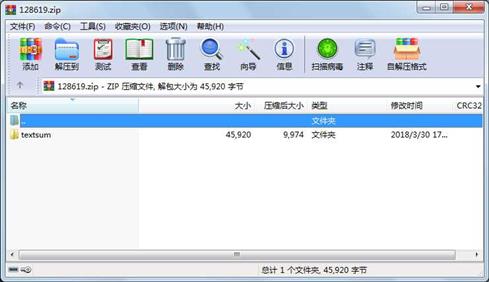

                self.atten_cell = tf.contrib

Type        Size  Date        Time   Name
---------  -----  ----------  -----  ----
Directory      0  2018-03-30  17:00  textsum\
Directory      0  2018-04-03  14:43  textsum\.idea\
Directory      0  2018-04-03  17:12  textsum\.idea\inspectionProfiles\
File         227  2018-03-30  14:27  textsum\.idea\misc.xm
File         266  2018-03-30  14:27  textsum\.idea\modules.xm
File         398  2018-03-30  14:40  textsum\.idea\textsum.iml
File       17597  2018-04-03  14:43  textsum\.idea\workspace.xm
File        7380  2018-03-30  14:25  textsum\attention_model.py
File        1401  2018-03-30  17:00  textsum\bleu.py
File         602  2018-03-30  14:25  textsum\config.py
File       12920  2018-03-30  14:25  textsum\main.py
File        2611  2018-03-30  14:25  textsum\model_helper.py
File        2518  2018-03-30  14:25  textsum\preprocess.py
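The snippet stops right after it builds `LuongAttention` over `bi_outputs`. The following minimal pure-Python sketch shows what unscaled Luong ("general") attention computes at one decoder step for a single example:

1. The keys are a linear projection of the encoder outputs.
2. Each score is the dot product of the decoder state with a key.
3. Positions past `memory_length` are masked out before the softmax.
4. The context vector is the alignment-weighted sum of the encoder outputs.

It illustrates the math only and is not the `tf.contrib.seq2seq` implementation. The names `luong_attention` and `matvec` and the toy inputs are hypothetical.

```python
import math


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def luong_attention(query, memory, W, memory_length):
    """Unscaled Luong ('general') attention for one decoder step, one example.

    query:         decoder state h_t, length d
    memory:        encoder outputs h_s, T rows of length d_mem
                   (rows at index >= memory_length are padding)
    W:             d x d_mem projection; keys are k_s = W h_s
    memory_length: number of valid (non-padding) encoder positions
    """
    keys = [matvec(W, h_s) for h_s in memory]
    # score_s = h_t . k_s for valid positions; padding positions get -inf
    scores = [
        sum(q * k for q, k in zip(query, key)) if s < memory_length else -math.inf
        for s, key in enumerate(keys)
    ]
    # Numerically stable softmax; exp(-inf) == 0.0, so padding gets zero weight
    m = max(scores[:memory_length])
    exps = [math.exp(sc - m) for sc in scores]
    z = sum(exps)
    alignments = [e / z for e in exps]
    # Context vector: alignment-weighted sum of the unprojected encoder outputs
    context = [
        sum(a * h_s[j] for a, h_s in zip(alignments, memory))
        for j in range(len(memory[0]))
    ]
    return alignments, context


# Toy example: two real encoder positions plus one padding row.
alignments, context = luong_attention(
    query=[1.0, 0.0],
    memory=[[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]],
    W=[[1.0, 0.0], [0.0, 1.0]],
    memory_length=2,
)
print(alignments, context)  # alignments ≈ [0.731, 0.269, 0.0], context ≈ [0.731, 0.269]
```

The score is a dot product between the decoder state and keys of depth `num_units * 2`. That is why the snippet builds the decoder cell with `num_units * 2` units. The same width also matches the concatenated forward/backward encoder states stored in `encoder_final_state`.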


