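Resource overview

A working AI for 15*15 Gomoku (five-in-a-row) with forbidden-move rules. It plays at a strong level, and the source code is included.

Code snippet and file information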

# -*- coding: utf-8 -*-
"""
@author: Junxiao Song
"""

from __future__ import print_function
import numpy as np

class Board(object):
    """board for the game"""

    def __init__(self, **kwargs):
        self.width = int(kwargs.get('width', 8))
        self.height = int(kwargs.get('height', 8))
        # board states stored as a dict,
        # key: move as location on the board,
        # value: player as pieces type
        self.states = {}
        # need how many pieces in a row to win
        self.n_in_row = int(kwargs.get('n_in_row', 5))
        self.players = [1, 2]  # player1 and player2

    def init_board(self, start_player=0):
        if self.width < self.n_in_row or self.height < self.n_in_row:
            raise Exception('board width and height can not be '
                            'less than {}'.format(self.n_in_row))
        self.current_player = self.players[start_player]  # start player
        # keep available moves in a list
        self.availables = list(range(self.width * self.height))
        self.states = {}
        self.last_move = -1

    def move_to_location(self, move):
        """
        3*3 board's moves like:
        6 7 8
        3 4 5
        0 1 2
        and move 5's location is (1,2)
        """
        h = move // self.width
        w = move % self.width
        return [h, w]

    def location_to_move(self, location):
        if len(location) != 2:
            return -1
        h = location[0]
        w = location[1]
        move = h * self.width + w
        if move not in range(self.width * self.height):
            return -1
        return move

    def current_state(self):
        """return the board state from the perspective of the current player.
        state shape: 4*width*height
        """
        square_state = np.zeros((4, self.width, self.height))
        if self.states:
            moves, players = np.array(list(zip(*self.states.items())))
            move_curr = moves[players == self.current_player]
            move_oppo = moves[players != self.current_player]
            # column index is move % width, matching move_to_location
            # (identical to % height on a square board)
            square_state[0][move_curr // self.width,
                            move_curr % self.width] = 1.0
            square_state[1][move_oppo // self.width,
                            move_oppo % self.width] = 1.0
            # indicate the last move location
            square_state[2][self.last_move // self.width,
                            self.last_move % self.width] = 1.0
        if len(self.states) % 2 == 0:
            square_state[3][:, :] = 1.0  # indicate the colour to play
        return square_state[:, ::-1, :]

    def do_move(self, move):
        self.states[move] = self.current_player
        self.availables.remove(move)
        # switch to the other player
        self.current_player = (
            self.players[0] if self.current_player == self.players[1]
            else self.players[1]
        )
        self.last_move = move

    def has_a_winner(self):
        width = self.width
        height = self.height
        states = self.states
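The move numbering described in the `move_to_location` docstring can be checked with a small standalone sketch. The two functions below are hypothetical free-function versions of the `Board` methods, written only for illustration. They assume the row-major numbering from the snippet, where move `m` on a board of width `W` sits at row `m // W` and column `m % W`.

```python
def move_to_location(move, width):
    """Convert a flat move index into [row, column] (row-major order)."""
    return [move // width, move % width]


def location_to_move(location, width, height):
    """Convert [row, column] back to a flat index, or -1 if invalid."""
    if len(location) != 2:
        return -1
    h, w = location
    move = h * width + w
    # Same range check as the snippet: only the flat index is validated.
    if move not in range(width * height):
        return -1
    return move


# On a 3*3 board, move 5 should map to row 1, column 2 and back again.
loc = move_to_location(5, width=3)
back = location_to_move(loc, width=3, height=3)
print(loc, back)
```

As in the snippet, only the resulting flat index is range-checked, so a column value larger than the board width is not rejected by itself.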

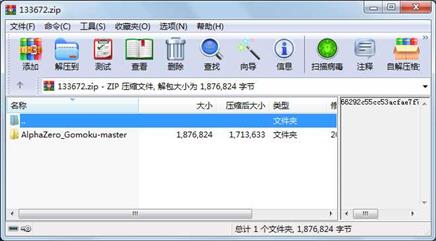

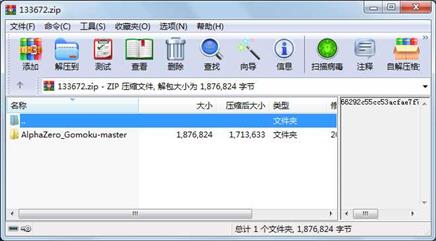
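The four feature planes built by `current_state` in the snippet above can be illustrated with a small sketch. The function name `encode_state` and its arguments are assumptions made for this example and are not part of the original code. It uses a plain loop instead of the snippet's vectorised indexing, and it allocates the planes as (4, height, width), which coincides with the documented 4*width*height shape on a square board.

```python
import numpy as np


def encode_state(states, current_player, last_move, width, height):
    """Build four binary planes from a {move: player} dict.

    Plane 0: stones of the player to move.
    Plane 1: stones of the opponent.
    Plane 2: location of the last move.
    Plane 3: all ones when an even number of stones is on the board.
    """
    planes = np.zeros((4, height, width))
    for move, player in states.items():
        plane = 0 if player == current_player else 1
        planes[plane, move // width, move % width] = 1.0
    if states:
        planes[2, last_move // width, last_move % width] = 1.0
    if len(states) % 2 == 0:
        planes[3, :, :] = 1.0
    # Flip the row axis so that row 0 ends up at the bottom,
    # matching the board picture in the move_to_location docstring.
    return planes[:, ::-1, :]


# Player 1 played move 4 (centre of a 3*3 board), player 2 played move 0,
# and it is player 1's turn again.
state = encode_state({4: 1, 0: 2}, current_player=1, last_move=0,
                     width=3, height=3)
print(state.shape)
```

After the flip, the opponent's stone at move 0 (row 0, column 0) appears in the last row of plane 1, which is how the bottom-left corner of the docstring picture is laid out.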

| Attribute | Size (bytes) | Date | Time | Name |
|-----------|-------------:|------------|-------|------|
| Directory | 0 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\` |
| File | 19 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\.gitignore` |
| File | 1068 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\LICENSE` |
| File | 3010 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\README.md` |
| File | 417720 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\best_policy_6_6_4.model` |
| File | 417720 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\best_policy_6_6_4.model2` |
| File | 476972 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\best_policy_8_8_5.model` |
| File | 476972 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\best_policy_8_8_5.model2` |
| File | 8207 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\game.py` |
| File | 2889 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\human_play.py` |
| File | 7844 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\mcts_alphaZero.py` |
| File | 7192 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\mcts_pure.py` |
| File | 21521 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\playout400.gif` |
| File | 5125 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\policy_value_net.py` |
| File | 4895 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\policy_value_net_keras.py` |
| File | 4037 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\policy_value_net_numpy.py` |
| File | 6163 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\policy_value_net_pytorch.py` |
| File | 6680 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\policy_value_net_tensorflow.py` |
| File | 8790 | 2018-05-16 | 11:45 | `AlphaZero_Gomoku-master\train.py` |
