Resource overview

I am currently studying unconstrained conjugate gradient methods and would welcome discussion with everyone!
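Since the overview mentions unconstrained conjugate gradient methods, here is a minimal sketch of the textbook linear conjugate gradient method. It minimizes the quadratic f(x) = ½xᵀAx − bᵀx for a symmetric positive definite A, which is equivalent to solving Ax = b. The function name `conjugate_gradient` and its parameters are hypothetical, and this is a generic illustration in plain Python, not code from the toolbox whose files are listed on this page.

```python
def conjugate_gradient(A, b, x0=None, tol=1e-10, max_iter=None):
    """Minimise f(x) = 0.5 * x^T A x - b^T x, i.e. solve A x = b,
    for a symmetric positive definite A stored as a list of rows."""
    n = len(b)
    x = list(x0) if x0 is not None else [0.0] * n
    max_iter = max_iter if max_iter is not None else n

    def matvec(M, v):
        return [sum(mij * vj for mij, vj in zip(row, v)) for row in M]

    def dot(u, v):
        return sum(ui * vi for ui, vi in zip(u, v))

    r = [bi - axi for bi, axi in zip(b, matvec(A, x))]  # residual = -grad f(x)
    p = list(r)                                          # first direction: steepest descent
    rs_old = dot(r, r)
    for _ in range(max_iter):
        if rs_old ** 0.5 < tol:
            break                                        # gradient is (numerically) zero
        Ap = matvec(A, p)
        alpha = rs_old / dot(p, Ap)                      # exact line search along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        beta = rs_new / rs_old                           # standard linear-CG update
        p = [ri + beta * pi for ri, pi in zip(r, p)]     # new A-conjugate direction
        rs_old = rs_new
    return x


x = conjugate_gradient([[4.0, 1.0], [1.0, 3.0]], [1.0, 2.0])
print(x)  # approximately [1/11, 7/11]
```

In exact arithmetic linear CG terminates in at most n iterations, which is why `max_iter` defaults to n here. For non-quadratic objectives, nonlinear variants (for example Fletcher-Reeves or Polak-Ribière) keep the same direction update but replace the closed-form `alpha` with a line search.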

Code snippet and file information

function [s err_mse iter_time]=block_gp(xAgroupvarargin)

% block_gp: Block Gradient Pursuit algorithm (modification from [1])

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% Usage

% [s err_mse iter_time]=block_gp(xPm‘option_name‘‘option_value‘)

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% Input

% Mandatory:

% x Observation vector to be decomposed

% P Either:

% 1) An nxm matrix (n must be dimension of x)

% 2) A function handle (type “help function_format“

% for more information)

% Also requires specification of P_trans option.

% 3) An object handle (type “help object_format“ for

% more information)

% m length of s

%

% Possible additional options:

% (specify as many as you want using ‘option_name‘‘option_value‘ pairs)

% See below for explanation of options:

%__________________________________________________________________________

% option_name | available option_values | default

%--------------------------------------------------------------------------

% stopCrit | M corr mse mse_change | M

% stopTol | number (see below) | n/4

% P_trans | function_handle (see below) |

% maxIter | positive integer (see below) | n

% verbose | true false | false

% start_val | vector of length m | zeros

% GradSteps | ‘auto‘ or integer | ‘auto‘

%

% Available stopping criteria :

% M - Extracts exactly M = stopTol elements.

% corr - Stops when maximum correlation between

% residual and atoms is below stopTol value.

% mse - Stops when mean squared error of residual

% is below stopTol value.

% mse_change - Stops when the change in the mean squared

% error falls below stopTol value.

%

% stopTol: Value for stopping criterion.

%

% P_trans: If P is a function handle then P_trans has to be specified and

% must be a function handle.

%

% maxIter: Maximum of allowed iterations.

%

% verbose: Logical value to allow algorithm progress to be displayed.

%

% start_val: Allows algorithms to start from partial solution.

%

% GradSteps: Number of gradient optimisation steps per iteration.

% ‘auto‘ uses inner products to decide if more gradient steps

% are required.

%

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% Outputs

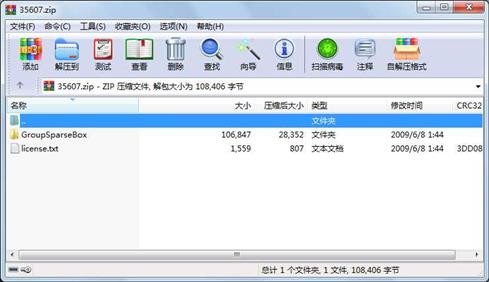

% s Soluti 属性 大小 日期 时间 名称
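The header documents block_gp only through its interface. As a hedged illustration, the sketch below shows what a block gradient pursuit loop can look like: each iteration selects the not-yet-selected block whose correlations with the residual carry the largest ℓ2 energy, then takes `grad_steps` exact-line-search gradient steps restricted to the atoms of all selected blocks. The dictionary is passed as two callables `P` and `Pt`, mirroring the header's function-handle mode with `P_trans`. The block-selection rule, the `groups` label format, the fixed step count (no `'auto'` mode), and every name here (`matrix_operators`, `block_gradient_pursuit`, `n_blocks`, `grad_steps`) are assumptions for illustration, not the toolbox's actual implementation.

```python
def matrix_operators(A):
    """Hypothetical helper: build P(v) = A v and Pt(u) = A^T u
    from a matrix stored as a list of rows."""
    n_rows, n_cols = len(A), len(A[0])

    def P(v):
        return [sum(A[i][j] * v[j] for j in range(n_cols)) for i in range(n_rows)]

    def Pt(u):
        return [sum(A[i][j] * u[i] for i in range(n_rows)) for j in range(n_cols)]

    return P, Pt


def block_gradient_pursuit(x, P, Pt, groups, n_blocks, grad_steps=1):
    """Hypothetical block gradient pursuit sketch.

    x          observation vector (list of floats)
    P, Pt      callables applying the dictionary and its transpose
    groups     groups[i] is the block label of coefficient i (assumed format)
    n_blocks   number of blocks to select (in the spirit of stopCrit 'M')
    grad_steps gradient steps per iteration (fixed, no 'auto' mode)
    Returns the coefficients s and the residual r = x - P(s).
    """
    s = [0.0] * len(groups)
    r = list(x)
    chosen = set()
    for _ in range(n_blocks):
        g = Pt(r)  # correlations between the residual and every atom
        # Energy of the correlations in each block not selected yet.
        energy = {}
        for i, gi in enumerate(g):
            if groups[i] not in chosen:
                energy[groups[i]] = energy.get(groups[i], 0.0) + gi * gi
        if not energy:
            break  # every block has already been selected
        chosen.add(max(energy, key=energy.get))

        for _ in range(grad_steps):
            g = Pt(r)
            # Gradient direction restricted to atoms in the selected blocks.
            d = [gi if groups[i] in chosen else 0.0 for i, gi in enumerate(g)]
            c = P(d)
            cc = sum(ci * ci for ci in c)
            if cc == 0.0:
                break  # residual is already orthogonal to the selected atoms
            a = sum(ri * ci for ri, ci in zip(r, c)) / cc  # minimises ||r - a*c||^2
            s = [si + a * di for si, di in zip(s, d)]
            r = [ri - a * ci for ri, ci in zip(r, c)]
    return s, r


P, Pt = matrix_operators([[1.0, 0.5, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
s, r = block_gradient_pursuit([1.5, 1.0, 0.0], P, Pt, [0, 0, 1], n_blocks=1, grad_steps=50)
print(s)  # close to [1.0, 1.0, 0.0]
```

Passing `P` and `Pt` as callables keeps the loop independent of whether the dictionary is an explicit matrix or a fast transform, which matches the header's reason for accepting function handles.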

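The four stopping criteria in the header (`M`, `corr`, `mse`, `mse_change`) can be expressed as one predicate. This is a hedged sketch: the name `should_stop`, its arguments, and computing the mean squared error as ‖r‖²/n are assumptions for illustration, and the snippet does not say whether `M` counts individual coefficients or whole blocks in block_gp.

```python
def should_stop(crit, tol, n_selected, residual, prev_mse, correlations):
    """Hypothetical check of the header's stopping criteria.

    crit         one of "M", "corr", "mse", "mse_change" (stopCrit)
    tol          the stopTol value
    n_selected   number of elements selected so far
    residual     current residual vector
    prev_mse     MSE from the previous iteration, or None on the first one
    correlations current correlations between the residual and the atoms
    """
    mse = sum(ri * ri for ri in residual) / len(residual)
    if crit == "M":
        return n_selected >= tol  # extract exactly M = stopTol elements
    if crit == "corr":
        return max(abs(c) for c in correlations) < tol
    if crit == "mse":
        return mse < tol
    if crit == "mse_change":
        # Needs a previous value before a change can be measured.
        return prev_mse is not None and abs(prev_mse - mse) < tol
    raise ValueError(f"unknown stopping criterion: {crit}")


r = [1.0, -1.0, 0.0, 0.0]  # mean squared error 0.5
print(should_stop("mse", 0.6, 0, r, None, [0.1]))  # True
```

In a full loop this check would run alongside the `maxIter` limit, so the algorithm still terminates when the chosen criterion is never met.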
| Type | Size (bytes) | Date | Time | Name |
|---|---:|---|---|---|
| Directory | 0 | 2009-06-07 | 17:44 | `GroupSparseBox\` |
| File | 14588 | 2009-01-30 | 15:47 | `GroupSparseBox\block_gp.m` |
| File | 17851 | 2009-01-30 | 15:47 | `GroupSparseBox\block_nomp.m` |
| File | 15806 | 2009-01-30 | 15:48 | `GroupSparseBox\block_pcgp.m` |
| File | 856 | 2009-01-30 | 16:07 | `GroupSparseBox\BMP.m` |
| File | 919 | 2009-01-24 | 22:42 | `GroupSparseBox\BOMP.m` |
| File | 1218 | 2009-01-30 | 16:14 | `GroupSparseBox\demo.m` |
| File | 549 | 2009-01-24 | 22:18 | `GroupSparseBox\fdrthresh.m` |
| File | 878 | 2009-01-24 | 22:18 | `GroupSparseBox\GenGroupSparseProblem.m` |
| File | 872 | 2009-01-30 | 16:07 | `GroupSparseBox\GMP.m` |
| File | 863 | 2009-01-24 | 22:17 | `GroupSparseBox\GOMP.m` |
| File | 15088 | 2009-01-30 | 16:11 | `GroupSparseBox\group_gp.m` |
| File | 18126 | 2009-01-30 | 15:46 | `GroupSparseBox\group_nomp.m` |
| File | 16090 | 2009-01-30 | 15:46 | `GroupSparseBox\group_pcgp.m` |
| File | 106 | 2009-01-24 | 22:18 | `GroupSparseBox\matrix_normalizer.m` |
| File | 1683 | 2009-01-24 | 22:18 | `GroupSparseBox\ReGOMP.m` |
| File | 1354 | 2009-01-24 | 22:17 | `GroupSparseBox\StGOMP.m` |
| File | 1559 | 2009-06-07 | 17:44 | `license.txt` |

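Related resources

- Mechanical optimization design and application examples
- Optimization (Chen Baolin) book: high-resolution PDF and PPT bundle
- Optimization of rolling business development plans for oilfield enterprises and
- A new class of spectral conjugate gradient based on Armijo line search
- NP-complete problems (related)
- Practical Optimization Methods, Dalian University of Technology Press
- GPOPS 2018 tool, official manual, and installation guide
- Numerical optimization algorithms and theory
- Lu Wusheng optimization books and lecture notes.7z
- Optimization reference lecture notes, Shanghai Jiao Tong University
- An Introduction to Optimization, 4th edition: solutions
- Optimization Theory and Algorithms: exercise solutions
- Optimization lecture notes, Shanghai Jiao Tong University
- Principles of optimization computation and algorithm programming
- Integer programming lecture notes, Xiaoling Sun 2012
- Optimization methods and their applications
- Optimization Methods, 2nd edition
- Detailed combinatorial optimization PPT slides
- Mathematical modeling: optimization models and algorithm programs
- Collection of common programs for optimization computation methods
- Optimization Theory and Methods, Yuan Yaxiang and Sun Wenyu
- Optimization principles and methods.pdf
- Optimization software GPOPS
- Optimization methods slides: Optimization theory and
- Optimization Theory and Methods (Yuan Yaxiang)
- An Introduction to Optimization: end-of-chapter solutions
- Numerical Optimization (Li Donghui): end-of-chapter solutions, chapter
- Combinatorial Optimization and Algorithms, 4th edition
- Optimization Methods and Their Applications: end-of-chapter solutions (Guo Ke
- 1stopt 1.5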

Sichuan Public Network Security Registration No. 51152502000135
