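Resource overview

A MATLAB toolbox for Markov decision processes (MDPs), including value iteration, policy iteration, and related algorithms. Suitable for study.

Code snippet and file information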

function [V, policy] = mdp_bellman_operator(P, PR, discount, Vprev)

% mdp_bellman_operator Applies the Bellman operator on the value function Vprev

% Returns a new value function and a Vprev-improving policy

% Arguments ---------------------------------------------------------------

% Let S = number of states, A = number of actions

% P(SxSxA) = transition matrix

% P could be an array with 3 dimensions or

% a cell array (1xA), each cell containing a matrix (SxS), possibly sparse

% PR(SxA) = reward matrix

% PR could be an array with 2 dimensions or

% a sparse matrix

% discount = discount rate in ]0; 1]

% Vprev(S) = value function

% Evaluation --------------------------------------------------------------

% V(S) = new value function

% policy(S) = Vprev-improving policy

% MDPtoolbox: Markov Decision Processes Toolbox

% Copyright (C) 2009 INRA

% Redistribution and use in source and binary forms, with or without modification,

% are permitted provided that the following conditions are met:

% * Redistributions of source code must retain the above copyright notice,

% this list of conditions and the following disclaimer.

% * Redistributions in binary form must reproduce the above copyright notice,

% this list of conditions and the following disclaimer in the documentation

% and/or other materials provided with the distribution.

% * Neither the name of the nor the names of its contributors

% may be used to endorse or promote products derived from this software

% without specific prior written permission.

% THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND

% ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED

% WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED.

% IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT,

% INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING,

% BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE,

% DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF

% LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE

% OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED

% OF THE POSSIBILITY OF SUCH DAMAGE.

if discount <= 0 | discount > 1

disp('--------------------------------------------------------')

disp('MDP Toolbox ERROR: Discount rate must be in ]0; 1]')

disp('--------------------------------------------------------')

elseif ((iscell(P)) & (size(Vprev) ~= size(P{1},1)))

disp('--------------------------------------------------------')

disp('MDP Toolbox ERROR: Vprev must have the same dimension as P')

disp('--------------------------------------------------------')

elseif ((~iscell(P)) & (size(Vprev) ~= size(P,1)))
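The excerpt above ends during argument checking, before the computation itself. As a rough illustration of what a Bellman operator does with these arguments, here is a minimal sketch. It is not the toolbox source: the name `bellman_backup_sketch` is hypothetical, and it assumes a dense `P` of size SxSxA and a dense `PR` of size SxA (no cell-array or sparse inputs, no input validation). Saving it as one script file requires MATLAB R2016b or later, since local functions in scripts are placed at the end of the file.

```matlab
% Small illustrative problem: S = 2 states, A = 2 actions.
P  = cat(3, [0.5 0.5; 0.8 0.2], [0 1; 0.1 0.9]);  % P(s, s', a)
PR = [5 10; -1 2];                                % PR(s, a)
Vprev = [0; 0];                                   % previous value function

[V, policy] = bellman_backup_sketch(P, PR, 0.9, Vprev);
disp(V')        % with Vprev = 0, V is the best immediate reward: 10 2
disp(policy')   % index of the maximizing action per state: 2 2

% Hypothetical helper (an assumption, not part of MDPtoolbox):
% one Bellman backup for dense P (SxSxA) and dense PR (SxA).
function [V, policy] = bellman_backup_sketch(P, PR, discount, Vprev)
    [S, A] = size(PR);
    Q = zeros(S, A);
    for a = 1:A
        % Q(s,a) = PR(s,a) + discount * sum over s' of P(s,s',a) * Vprev(s')
        Q(:, a) = PR(:, a) + discount * P(:, :, a) * Vprev(:);
    end
    % Greedy step: best value and maximizing action for every state.
    [V, policy] = max(Q, [], 2);
end
```

Looping over actions keeps each step a plain matrix-vector product, which would also carry over to the cell-array form of `P` by replacing `P(:, :, a)` with `P{a}`.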

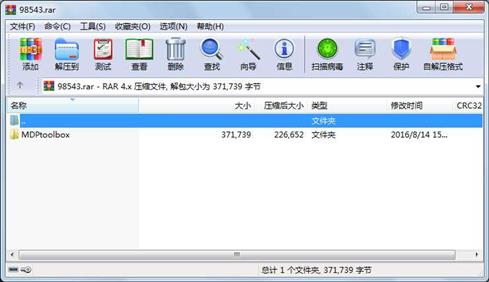

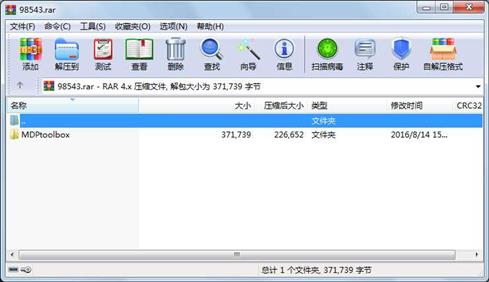

| Attribute | Size (bytes) | Date | Time | Name |
| --- | --- | --- | --- | --- |
| File | 63 | 2009-11-09 | 18:08 | `MDPtoolbox\AUTHORS` |
| File | 1563 | 2009-11-09 | 18:08 | `MDPtoolbox\COPYING` |
| File | 231 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\arrow.gif` |
| File | 6876 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\BIA.png` |
| File | 3110 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\DOCUMENTATION.html` |
| File | 6469 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\index_alphabetic.html` |
| File | 7022 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\index_category.html` |
| File | 134450 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\INRA.png` |
| File | 3276 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_bellman_operator.html` |
| File | 2959 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_check.html` |
| File | 2465 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_check_square_stochastic.html` |
| File | 3357 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_computePpolicyPRpolicy.html` |
| File | 2885 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_computePR.html` |
| File | 7506 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_eval_policy_iterative.html` |
| File | 2984 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_eval_policy_matrix.html` |
| File | 3691 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_eval_policy_optimality.html` |
| File | 3363 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_eval_policy_TD_0.html` |
| File | 6834 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_example_forest.html` |
| File | 3833 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_example_rand.html` |
| File | 4160 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_finite_horizon.html` |
| File | 3245 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_LP.html` |
| File | 4940 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_policy_iteration.html` |
| File | 4966 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_policy_iteration_modified.html` |
| File | 4185 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_Q_learning.html` |
| File | 7733 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_relative_value_iteration.html` |
| File | 2082 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_span.html` |
| File | 6731 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_value_iteration.html` |
| File | 8689 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_value_iterationGS.html` |
| File | 3580 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_value_iteration_bound_iter.html` |
| File | 2446 | 2009-11-09 | 18:08 | `MDPtoolbox\documentation\mdp_verbose_silent.html` |

............ (information for 30 more files omitted here)
