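Resource overview

This archive covers the paper "Attention is all you need". It contains TensorFlow and Keras versions of the code (attention_tf.py and attention_keras.py), a README.md, and a PDF of the paper itself. Good value; everyone is welcome to study it together!

Code snippet and file information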

```python
#! -*- coding: utf-8 -*-

from keras import backend as K
from keras.engine.topology import Layer  # Keras 2.x path; newer Keras: from keras.layers import Layer


class Position_embedding(Layer):

    def __init__(self, size=None, mode='sum', **kwargs):
        self.size = size  # must be an even number
        self.mode = mode  # 'sum' adds the embedding to x, 'concat' puts it in front of x
        super(Position_embedding, self).__init__(**kwargs)

    def call(self, x):
        # In 'sum' mode the embedding must have the same width as the input features
        if (self.size is None) or (self.mode == 'sum'):
            self.size = int(x.shape[-1])
        batch_size, seq_len = K.shape(x)[0], K.shape(x)[1]
        # Frequencies 1 / 10000^(2j / size) for j = 0 .. size/2 - 1
        position_j = 1. / K.pow(10000.,
                                2 * K.arange(self.size // 2, dtype='float32') / self.size)
        position_j = K.expand_dims(position_j, 0)
        # K.arange does not support variable lengths, so the positions 0, 1, 2, ...
        # are generated with a cumulative sum over ones instead
        position_i = K.cumsum(K.ones_like(x[:, :, 0]), 1) - 1
        position_i = K.expand_dims(position_i, 2)
        position_ij = K.dot(position_i, position_j)
        # All cos terms first, then all sin terms (concatenated, not interleaved)
        position_ij = K.concatenate([K.cos(position_ij), K.sin(position_ij)], 2)
        if self.mode == 'sum':
            return position_ij + x
        elif self.mode == 'concat':
            return K.concatenate([position_ij, x], 2)

    def compute_output_shape(self, input_shape):
        if self.mode == 'sum':
            return input_shape
        elif self.mode == 'concat':
            return (input_shape[0], input_shape[1], input_shape[2] + self.size)
```
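`Position_embedding.call` builds its table with Keras backend ops. As a dependency-free way to check the same arithmetic, here is a minimal plain-Python sketch (the function name `position_embedding` is hypothetical, not part of the archive). It computes w_j = 1 / 10000^(2j/size) and lays out the cos terms in the first half and the sin terms in the second half, as the layer does. These are the same values as the paper's PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)); the layer only arranges them in two halves instead of interleaving them.

```python
import math


def position_embedding(seq_len, size):
    """Hypothetical plain-Python version of the table Position_embedding builds.

    Column j (j < size/2) holds cos(pos * w_j) and column size/2 + j holds
    sin(pos * w_j), with w_j = 1 / 10000^(2j / size).
    """
    if size % 2 != 0:
        raise ValueError("size must be even")
    half = size // 2
    freqs = [1.0 / math.pow(10000.0, 2 * j / size) for j in range(half)]
    table = []
    # A plain range() is fine here; the layer uses a cumsum over ones instead
    # because, as its comment notes, K.arange does not support variable lengths
    for pos in range(seq_len):
        angles = [pos * w for w in freqs]
        table.append([math.cos(a) for a in angles] + [math.sin(a) for a in angles])
    return table


pe = position_embedding(seq_len=4, size=6)
print(len(pe), len(pe[0]))  # 4 6
print(pe[0])                # [1.0, 1.0, 1.0, 0.0, 0.0, 0.0]
```

In 'sum' mode the layer adds this table to every sequence in the batch, which is why `size` is taken from the input's last dimension; in 'concat' mode the table is placed in front of the input features along the last axis.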

class Attention(layer):

def __init__(self nb_head size_per_head **kwargs):

self.nb_head = nb_head

self.size_per_head = size_per_head

self.output_dim = nb_head*size_per_head

super(Attention self).__init__(**kwargs)

def build(self input_shape):

self.WQ = self.add_weight(name=‘WQ‘

shape=(input_shape[0][-1] self.output_dim)

initializer=‘glorot_uniform‘

trainable=True)

self.WK = self.add_weight(name=‘WK‘

shape=(input_shape[1][-1] self.output_dim)

initializer=‘glorot_uniform‘

trainable=True)

self.WV = self.add_weight(name=‘WV‘

shape=(input_shape[2][-1] self.output_dim)

initializer=‘glorot_uniform‘

trainable=True)

super(Attention self).build(input_shape)

def Mask(self inputs seq_len mode=‘mul‘):

if seq_len == None:

return inputs

else:

mask = K.one_hot(seq_len[:0] K.shape(inputs)[1])

mask = 1 - K.cumsum(mask 1)

for _ in range(len(inputs.shape)-2):

mask = K.expand_dims(mask 2)

if mode == ‘mul‘:

return inputs * mask

if mode == ‘add‘:

return inputs - (1 - mask) * 1e12

def call(self x):

#如果只传入Q_seqK_seqV_seq,那么就不做Mask

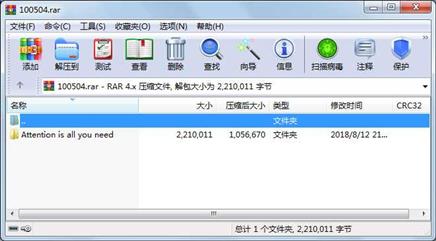

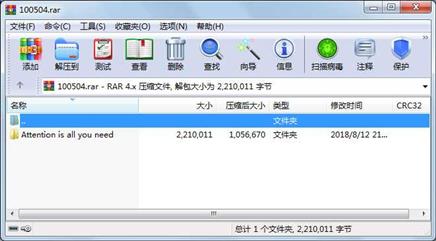

#如果同时传入Q_se 属性 大小 日期 时间 名称

----------- --------- ---------- ----- ----
.......          4578 2018-03-28 02:27 Attention is all you need\Attention is all you need\attention_keras.py
.......          3598 2018-03-28 02:27 Attention is all you need\Attention is all you need\attention_tf.py
.......           135 2018-03-28 02:27 Attention is all you need\Attention is all you need\README.md
File          2201700 2018-08-05 16:12 Attention is all you need\Attention is all you need.pdf
Dir                 0 2018-03-28 02:27 Attention is all you need\Attention is all you need
Dir                 0 2018-08-12 21:22 Attention is all you need
----------- --------- ---------- ----- ----
              2210011 6
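The `Mask` method of the `Attention` layer above builds its padding mask without a variable-length `arange`: `K.one_hot(seq_len[:, 0], T)` marks index `seq_len`, `K.cumsum` turns that into ones from `seq_len` onwards, and `1 - ...` leaves ones only at the valid positions. The sketch below reproduces that trick on plain Python lists. The helper names `length_mask` and `apply_mask` are hypothetical, and the real method additionally broadcasts the mask over extra axes with `K.expand_dims`.

```python
def length_mask(lengths, max_len):
    """Hypothetical re-creation of the mask built in Attention.Mask.

    For each length n: one_hot(n) has a 1 at index n, the running sum turns
    that into 1s from index n onwards, and 1 - running_sum keeps 1s only at
    the valid positions 0 .. n-1.
    """
    masks = []
    for n in lengths:
        one_hot = [1 if t == n else 0 for t in range(max_len)]
        running, cumsum = 0, []
        for bit in one_hot:
            running += bit
            cumsum.append(running)
        masks.append([1 - c for c in cumsum])
    return masks


def apply_mask(values, mask, mode='mul'):
    """mode='mul' zeroes padded entries; mode='add' subtracts 1e12 from them,
    so a softmax applied afterwards gives them (almost) zero weight."""
    if mode == 'mul':
        return [v * m for v, m in zip(values, mask)]
    if mode == 'add':
        return [v - (1 - m) * 1e12 for v, m in zip(values, mask)]
    raise ValueError("mode must be 'mul' or 'add'")


masks = length_mask([2, 4], max_len=4)
print(masks)                                       # [[1, 1, 0, 0], [1, 1, 1, 1]]
print(apply_mask([0.5, 0.2, 0.9, 0.1], masks[0]))  # [0.5, 0.2, 0.0, 0.0]
```

The 'mul' mode suits outputs that should simply be zero at padded steps, while 'add' suits attention scores that go through a softmax next, where a zero would still receive weight.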
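The preview stops before the body of `Attention.call`, so the rest of the layer is not shown here. For orientation only, the following plain-Python sketch shows the standard multi-head scaled dot-product attention from the paper, softmax(Q K^T / sqrt(d_k)) V per head, using projections shaped like `WQ`/`WK`/`WV` from `build()` and an additive key mask like `Mask(..., mode='add')`. It is not the author's `call` implementation; the function names `matmul`, `softmax`, `multi_head_attention` and the `v_len` argument are hypothetical.

```python
import math


def matmul(a, b):
    """Product of an (n x k) and a (k x m) matrix stored as lists of lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_attention(q_seq, k_seq, v_seq, wq, wk, wv,
                         nb_head, size_per_head, v_len=None):
    """Hypothetical sketch: project, split into heads, attend, concatenate.

    wq/wk/wv play the role of WQ/WK/WV: each maps its input to
    nb_head * size_per_head units. If v_len is given, key positions
    j >= v_len get 1e12 subtracted from their scores before the softmax.
    """
    q, k, v = matmul(q_seq, wq), matmul(k_seq, wk), matmul(v_seq, wv)
    heads = []
    for h in range(nb_head):
        cols = slice(h * size_per_head, (h + 1) * size_per_head)
        qh = [row[cols] for row in q]
        kh = [row[cols] for row in k]
        vh = [row[cols] for row in v]
        head_out = []
        for qi in qh:
            # Scaled dot product with every key: (q . k) / sqrt(d_k)
            scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(size_per_head)
                      for kj in kh]
            if v_len is not None:
                scores = [s - 1e12 if j >= v_len else s for j, s in enumerate(scores)]
            weights = softmax(scores)
            # Weighted sum of the value vectors
            head_out.append([sum(w * vj[c] for w, vj in zip(weights, vh))
                             for c in range(size_per_head)])
        heads.append(head_out)
    # Concatenate the heads along the feature axis: (Tq x nb_head * size_per_head)
    return [sum((heads[h][i] for h in range(nb_head)), []) for i in range(len(q_seq))]


identity = [[1.0, 0.0], [0.0, 1.0]]
x = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]  # the third position is padding
out = multi_head_attention(x, x, x, identity, identity, identity,
                           nb_head=2, size_per_head=1, v_len=2)
print(len(out), len(out[0]))  # 3 2
print(round(out[0][0], 4))    # 0.7311, i.e. e / (e + 1): the padded key gets no weight
```

Splitting the projected features into `nb_head` slices of `size_per_head` columns is what makes `output_dim = nb_head * size_per_head` in `__init__`: each head attends in its own subspace and the results are concatenated back to that width.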


Sichuan Public Network Security Filing No. 51152502000135
