
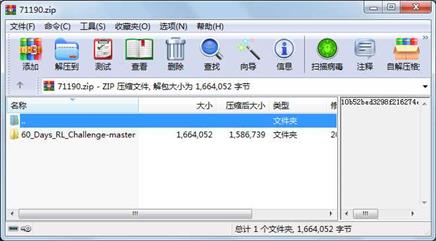

Size: 1.52 MB | File type: .zip | Cost: 2 coins | Downloads: 0 | Published: 2023-08-30

- Language: Python


Resource description

I designed this challenge for you and me: learn deep reinforcement learning in depth over 60 days.

Code snippet and file information

```python
import collections
import random
from collections import namedtuple

import gym
import matplotlib.pyplot as plt
import numpy as np
```

```python
def select_eps_greedy_action(table, obs, n_actions):
    """
    Select the action using an ε-greedy policy: with probability ε,
    take a random action instead of the greedy one
    """
    _, action = best_action_value(table, obs)

    # epsilon is the module-level exploration rate defined with the hyperparameters
    if random.random() < epsilon:
        return random.randint(0, n_actions - 1)
    else:
        return action
```
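The ε-greedy rule above reads `epsilon` from a module-level global, which makes it hard to check in isolation. A minimal sketch, assuming the Q-values of one state are given as a plain list (the helper `eps_greedy` and its parameters are hypothetical, not part of the original script), passes ε and the random generator explicitly:

```python
import random
from typing import Sequence


# Hypothetical helper, not from the original script
def eps_greedy(q_values: Sequence[float], epsilon: float, rng: random.Random) -> int:
    """Pick a uniformly random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        # Explore: uniform over all actions, so this branch can also hit the greedy action
        return rng.randrange(len(q_values))
    # Exploit: index of the largest Q-value (the first one on ties)
    return max(range(len(q_values)), key=lambda a: q_values[a])


rng = random.Random(0)
q_row = [0.1, 0.7, 0.3, 0.0]  # hypothetical Q-values of one state, one per action
print(eps_greedy(q_row, epsilon=0.0, rng=rng))  # prints 1: with epsilon 0 it is always greedy

# With epsilon = 0.2 and 4 actions, action 1 is chosen with probability 0.8 + 0.2 / 4 = 0.85
share = sum(eps_greedy(q_row, 0.2, rng) == 1 for _ in range(10_000)) / 10_000
print(round(share, 2))
```

Because the random branch draws uniformly from all actions (as `random.randint(0, n_actions - 1)` does in the script), the greedy action is actually chosen with probability (1 - ε) + ε / n_actions, not just 1 - ε.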

```python
def select_greedy_action(table, obs, n_actions):
    """
    Select the action using a greedy policy (take the best action according to the Q-table)
    """
    _, action = best_action_value(table, obs)
    return action
```

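```python
def best_action_value(table, state):
    """
    Explore the table and take the best action, i.e. the one that maximizes Q(s, a)
    """
    best_action = 0
    # Start below any possible Q-value so that negative values
    # (e.g. with the commented-out Taxi environment) are handled correctly
    max_value = float("-inf")

    # n_actions is the module-level action count set when the environment is created
    for action in range(n_actions):
        if table[(state, action)] > max_value:
            best_action = action
            max_value = table[(state, action)]

    return max_value, best_action
```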
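`best_action_value` keeps the first action that reaches the maximum, so while a state's entries are all still 0.0 the greedy choice is always action 0 (LEFT in FrozenLake). A common alternative is to break ties at random. A sketch under that assumption, with the hypothetical helper `best_action_value_random_ties` taking `n_actions` as a parameter instead of reading a global:

```python
import collections
import random


# Hypothetical variant of best_action_value, not from the original script
def best_action_value_random_ties(table, state, n_actions, rng=random):
    """Return (max_a Q(state, a), a greedy action), breaking ties uniformly at random."""
    values = [table[(state, a)] for a in range(n_actions)]
    max_value = max(values)  # also correct when every Q-value is negative
    candidates = [a for a, v in enumerate(values) if v == max_value]
    return max_value, rng.choice(candidates)


table = collections.defaultdict(float)
# Hypothetical Q-values for state 5 with 4 actions; actions 1 and 2 are tied
table[(5, 0)], table[(5, 1)], table[(5, 2)], table[(5, 3)] = -1.0, -0.2, -0.2, -3.0

rng = random.Random(0)
print(best_action_value_random_ties(table, 5, 4, rng))
```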

```python
def Q_learning(table, obs0, obs1, reward, action):
    """
    Q-learning: update Q(obs0, action) using max_a Q(obs1, a) and the reward just obtained
    """
    # Take the best value reachable from the state obs1
    best_value, _ = best_action_value(table, obs1)

    # Calculate the Q-target value
    Q_target = reward + GAMMA * best_value

    # Calculate the Q-error between the target and the previous value
    Q_error = Q_target - table[(obs0, action)]

    # Update Q(obs0, action)
    table[(obs0, action)] += LEARNING_RATE * Q_error
```
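Written out, the update performed by `Q_learning` is Q(s, a) ← Q(s, a) + α · (r + γ · max_a' Q(s', a') - Q(s, a)), with α = `LEARNING_RATE` and γ = `GAMMA`. The sketch below (the helper `q_update` is hypothetical and works on plain numbers rather than on the table) applies it with the script's values α = 0.8 and γ = 0.95 to show how a goal reward propagates one step back:

```python
GAMMA = 0.95
LEARNING_RATE = 0.8


# Hypothetical helper, not from the original script: one update on plain numbers
def q_update(q_sa, reward, best_next_value, gamma=GAMMA, lr=LEARNING_RATE):
    """Move Q(s, a) toward the target r + gamma * max_a' Q(s', a')."""
    q_target = reward + gamma * best_next_value
    q_error = q_target - q_sa
    return q_sa + lr * q_error


# Fresh entry, action reaches the goal (reward 1): 0 + 0.8 * (1 - 0) = 0.8
print(q_update(0.0, 1.0, 0.0))
# One step earlier, no reward, next state now worth 0.8:
# target 0.95 * 0.8 = 0.76, new value 0.8 * 0.76 = 0.608 (up to float rounding)
print(q_update(0.0, 0.0, 0.8))
```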

```python
def test_game(env, table, n_actions):
    """
    Test the new table by playing TEST_EPISODES games
    """
    reward_games = []
    for _ in range(TEST_EPISODES):
        obs = env.reset()
        rewards = 0
        while True:
            # Act greedily
            next_obs, reward, done, _ = env.step(select_greedy_action(table, obs, n_actions))
            obs = next_obs
            rewards += reward
            if done:
                reward_games.append(rewards)
                break

    return np.mean(reward_games)
```

```python
# Some hyperparameters...
GAMMA = 0.95

# NB: the decay rate regulates the exploration-exploitation trade-off.
# Epsilon starts at 1 and is multiplied by EPS_DECAY_RATE after every game, so it decays toward 0
epsilon = 1.0
EPS_DECAY_RATE = 0.9993
LEARNING_RATE = 0.8

# ... and constants
TEST_EPISODES = 100
MAX_GAMES = 15001
```
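Because `epsilon` is multiplied by `EPS_DECAY_RATE` once per game, after n games it equals 0.9993^n: it approaches 0 but never reaches it. A small sketch (the helpers `epsilon_after` and `games_until_below` are hypothetical) computes when the schedule crosses a given threshold:

```python
import math

EPS_DECAY_RATE = 0.9993
MAX_GAMES = 15001


# Hypothetical helper: exploration rate after n_games multiplicative decays
def epsilon_after(n_games, eps0=1.0, decay=EPS_DECAY_RATE):
    return eps0 * decay ** n_games


# Hypothetical helper: smallest n such that epsilon_after(n) < threshold (0 < decay < 1)
def games_until_below(threshold, eps0=1.0, decay=EPS_DECAY_RATE):
    # Closed-form estimate from eps0 * decay**n = threshold ...
    n = max(0, math.floor(math.log(threshold / eps0) / math.log(decay)))
    # ... then step forward to absorb floating-point rounding
    while epsilon_after(n, eps0, decay) >= threshold:
        n += 1
    return n


print(games_until_below(0.1), games_until_below(0.01))
print(epsilon_after(MAX_GAMES))  # small, but never exactly 0
```

By this calculation ε drops below 0.01 after roughly 6,600 games, so more than half of the 15,001 training games are played almost greedily; a decay rate closer to 1 stretches the exploration phase.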

```python
# Create the environment
# (uses the pre-0.26 gym API: reset() returns the observation, step() returns a 4-tuple)
# env = gym.make('Taxi-v2')
env = gym.make("FrozenLake-v0")
obs = env.reset()

obs_length = env.observation_space.n
n_actions = env.action_space.n

reward_count = 0
games_count = 0

# Create the table; every unseen (state, action) entry defaults to 0.0
table = collections.defaultdict(float)
test_rewards_list = []
```
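`collections.defaultdict(float)` calls `float()`, which returns 0.0, for any missing key. One consequence worth knowing: merely reading `table[(state, action)]`, as `best_action_value` does for every action of a state, inserts that key. A short demonstration (the keys used here are arbitrary examples):

```python
import collections

table = collections.defaultdict(float)

# Reading a missing (state, action) key returns 0.0 ...
print(table[(0, 1)])  # prints 0.0
# ... but also stores it, so the table grows on reads, not only on updates
print(len(table))  # prints 1

# dict.get() returns a default without inserting the key
print(table.get((3, 2), 0.0), len(table))  # prints 0.0 1
```

For the default 4×4 FrozenLake map (16 states, 4 actions) the table therefore holds at most 64 entries.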

```python
while games_count < MAX_GAMES:
    # Select the action following an ε-greedy policy
    action = select_eps_greedy_action(table, obs, n_actions)
    next_obs, reward, done, _ = env.step(action)

    # Update the Q-table
    Q_learning(table, obs, next_obs, reward, action)

    reward_count += reward
    obs = next_obs

    if done:
        epsilon *= EPS_DECAY_RATE

        # Test the new ta
```

| Type | Size (bytes) | Date | Time | Name |
|---|---|---|---|---|
| Directory | 0 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\` |
| File | 1203 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\.gitignore` |
| File | 1075 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\LICENSE` |
| File | 7868 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\README.md` |
| Directory | 0 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week2\` |
| File | 47757 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week2\frozenlake_Qlearning.ipynb` |
| File | 3465 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week2\frozenlake_Qlearning.py` |
| Directory | 0 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week2\img\` |
| File | 60162 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week2\img\Q_function.png` |
| File | 88665 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week2\img\frozenlake_v0.png` |
| File | 19080 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week2\img\short_diag.jpg` |
| Directory | 0 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\` |
| File | 5205 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\README.md` |
| File | 4258 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\agent.py` |
| File | 5665 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\atari_wrappers.py` |
| File | 1860 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\buffers.py` |
| File | 3522 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\central_control.py` |
| Directory | 0 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\imgs\` |
| File | 106449 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\imgs\DQN_variations.png` |
| File | 21564 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\imgs\Dueling_img.png` |
| File | 8790 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\imgs\double_Qlearning_formula.png` |
| File | 14527 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\imgs\multistep_formula.png` |
| File | 8952 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\imgs\noisenet_formula.png` |
| File | 469317 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\imgs\pong_gif.gif` |
| File | 2184 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\main.py` |
| File | 4661 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\neural_net.py` |
| File | 395 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\Week3\utils.py` |
| Directory | 0 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\images\` |
| File | 716068 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\images\logo5.png` |
| File | 61360 | 2018-09-15 | 09:48 | `60_Days_RL_Challenge-master\images\logo6.png` |


Sichuan Public Security Network Filing No. 51152502000135

